AGI? Rather AGU - Artificial General Unintelligence

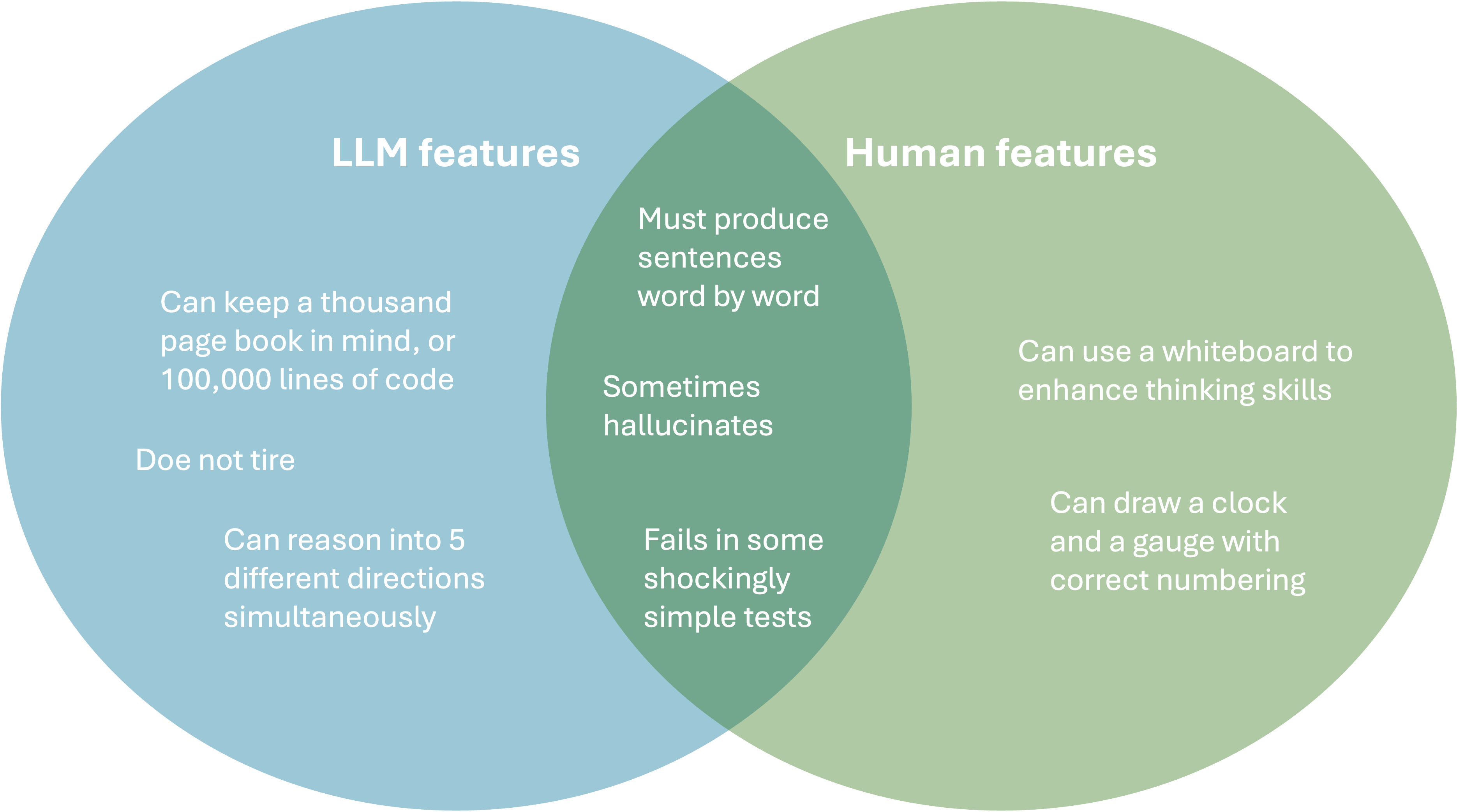

Recently I spent some time drawing a Venn diagram of the capabilities of current LLMs and humans. It turned out differently than I thought:

In terms of capabilities, there are striking differences between LLMs and humans. Some current LLMs feature a context window of one million tokens - that is roughly 2,500 pages of a book. And they know this content by heart. Unimaginable for a human.

Also, no matter how many dumb questions you ask, LLMs will never get tired or annoyed. They can answer the same question a million times without losing patience.

Another striking feature of current chain-of-thought reasoning LLMs is their ability to (if you can afford it) reason in parallel in multiple directions.

Humans, on the other hand, have the incredible ability to use tools like whiteboards to enhance their memory and reasoning. This boosts our lowly intelligence quite fundamentally - no major advance in science would be imaginable if scientists had to rely on their own memory alone.

And humans have a fundamental understanding of how the world works and what rules govern it. They understand that the numbers on a gauge or analog clock increase continuously and that the same distance on such a gauge always represents the same difference in value. Unless it doesn't, because of a logarithmic scale or something similar. AIs are notoriously bad at this. Trust me. I tried to create clocks. Again and again.

Interestingly, I found it much harder to find cool features that humans and LLMs have in common than to find issues we have in common (and that normal, algorithmic software doesn't have):

- When uttering sentences, both humans and LLMs must produce them word by word. Try to say a sentence and skip 3 words you wanted to say. Impossible. Just like LLMs produce sentences token by token. Interestingly, for me the same applies to music. Sometimes a song is playing that I know, but I just can't remember the title. I must mentally fast-forward through the song until the title is sung. I cannot just skip to the title.

- Sometimes humans and LLMs hallucinate. You typically don't remember it, but trust me, your memory fails you a lot (see also the great book "The Memory Illusion" by Julia Shaw), and then your mind makes stuff up. LLMs do the same, but we trust them less and check more often. And publish every single hallucination we find.

- Both humans and LLMs fail at shockingly simple tests. The literature is full of gruesome reasoning failures of humans. There are dozens of wrong mental shortcuts we take, leading to all kinds of cognitive biases. And we have no intuitive understanding of exponential growth, no matter how hard we try. I understand that the root cause of LLM failures is different - with too little knowledge, the most plausible continuation is often to make things up. But the result is the same: we both fail at simple reasoning tasks.

Conclusion

It seems that what unites us is our failures. And our skills are so vastly different that, maybe, it is a bad idea to try to evolve LLMs into AGI, i.e., to strive for artificial general intelligence by mimicking human cognition. Instead, perhaps we should focus on leveraging the unique strengths of both humans and LLMs, combining them in ways that complement each other's abilities. This approach could lead to more effective and reliable systems, rather than chasing an elusive goal of human-like intelligence in machines.

Okay, that was what the LLM concluded. What I actually wanted to write: maybe it is a bad idea to strive for an AGI, an AI with a superset of our skills and capabilities. As Ray Kurzweil wrote in "The Singularity is Nearer", AIs are very alien intelligences. Let's not try to make them more human. Let's evolve them into partners that complement our skills and capabilities.

Coding

Let's take coding as an example. By now, LLMs are fairly good at writing code, but, again, in a very different way than humans. They can produce code much faster than we can, and on a low level this code is typically more correct than ours. I have never seen an LLM forget to increment a loop counter, for example. And they do not tire of writing large pieces of code. This makes "AI coding" a different problem that calls for a different approach than "human coding", where every line is mental effort that will drain you, and where the more experienced of us spend time upfront building a mental model of how the whole thing should work and why this will solve the problem at hand.

So a good way to code with LLMs is to have a huge set of automated tests to verify the code. Then you can let the LLM agent change code, test it, reason about what's wrong, change it again, and so on, until it works. And if it fails, you change the spec, revert to the last git state, and start over. You could also dream up an IDE in which 5 LLMs work on a task independently, and after each step the solution that progresses fastest is selected to continue. None of this would make any sense for a human.
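To make this workflow a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `agent_loop`, `best_of_n_step`, and the `Proposer` and `TestRunner` types are hypothetical names, the "LLM" is replaced by toy functions so the code runs on its own, and "progresses fastest" is interpreted as "has the fewest failing tests", which is only one possible measure.

```python
from typing import Callable, List, Optional

# All names below are hypothetical. In a real setup, a Proposer would wrap a
# call to an LLM agent and a TestRunner would invoke the project's test suite.
Proposer = Callable[[str, List[str]], str]   # (code, failures) -> revised code
TestRunner = Callable[[str], List[str]]      # code -> failure messages


def agent_loop(code: str, propose: Proposer, run_tests: TestRunner,
               max_rounds: int = 10) -> Optional[str]:
    """Change, test, repeat. Returns passing code, or None if the budget runs out."""
    failures = run_tests(code)
    for _ in range(max_rounds):
        if not failures:
            break
        code = propose(code, failures)
        failures = run_tests(code)
    # None tells the caller to change the spec, revert, and start over.
    return code if not failures else None


def best_of_n_step(code: str, proposers: List[Proposer],
                   run_tests: TestRunner) -> str:
    """One step of the multi-agent idea: keep the candidate with the fewest failures."""
    failures = run_tests(code)
    candidates = [propose(code, failures) for propose in proposers]
    return min(candidates, key=lambda c: len(run_tests(c)))


# Toy stand-ins so the sketch runs without an LLM: "code" is a string and a
# "test" checks that a required word appears in it.
REQUIRED = ["parse", "validate", "save"]


def toy_run_tests(code: str) -> List[str]:
    return [f"missing {word}" for word in REQUIRED if word not in code.split()]


def toy_propose(code: str, failures: List[str]) -> str:
    # Fixes only the first reported failure per round.
    if not failures:
        return code
    return (code + " " + failures[0].split()[-1]).strip()


def lazy_propose(code: str, failures: List[str]) -> str:
    # An "agent" that makes no progress at all.
    return code


if __name__ == "__main__":
    print(agent_loop("", toy_propose, toy_run_tests))
    print(agent_loop("", toy_propose, toy_run_tests, max_rounds=2))
    print(best_of_n_step("", [lazy_propose, toy_propose], toy_run_tests))
```

The point of the sketch is the shape of the process, not the toy functions: the test suite is the only judge, a failed attempt costs nothing but compute, and several attempts can run side by side.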

The optimal way to code with and without LLMs is different, and I predict that the two will diverge even more in the future. Trying to build an LLM coder that works slowly and methodically like a human is not necessarily the best way to go.

And more

I am not a physicist. Nor am I a chemist. But I can imagine that the same applies to these and other fields of science. Heading for an AGI is wasteful; expanding AI skills in directions outside of human skills is a better way to go.