Building apps with LLMs that need LLMs

Mind-twisting

Would I have thought a few months ago that I’d use an LLM to write a specification with which I ask an LLM to build an app in which I ask an LLM to evaluate a player’s move? Probably not. But that’s how it happened:

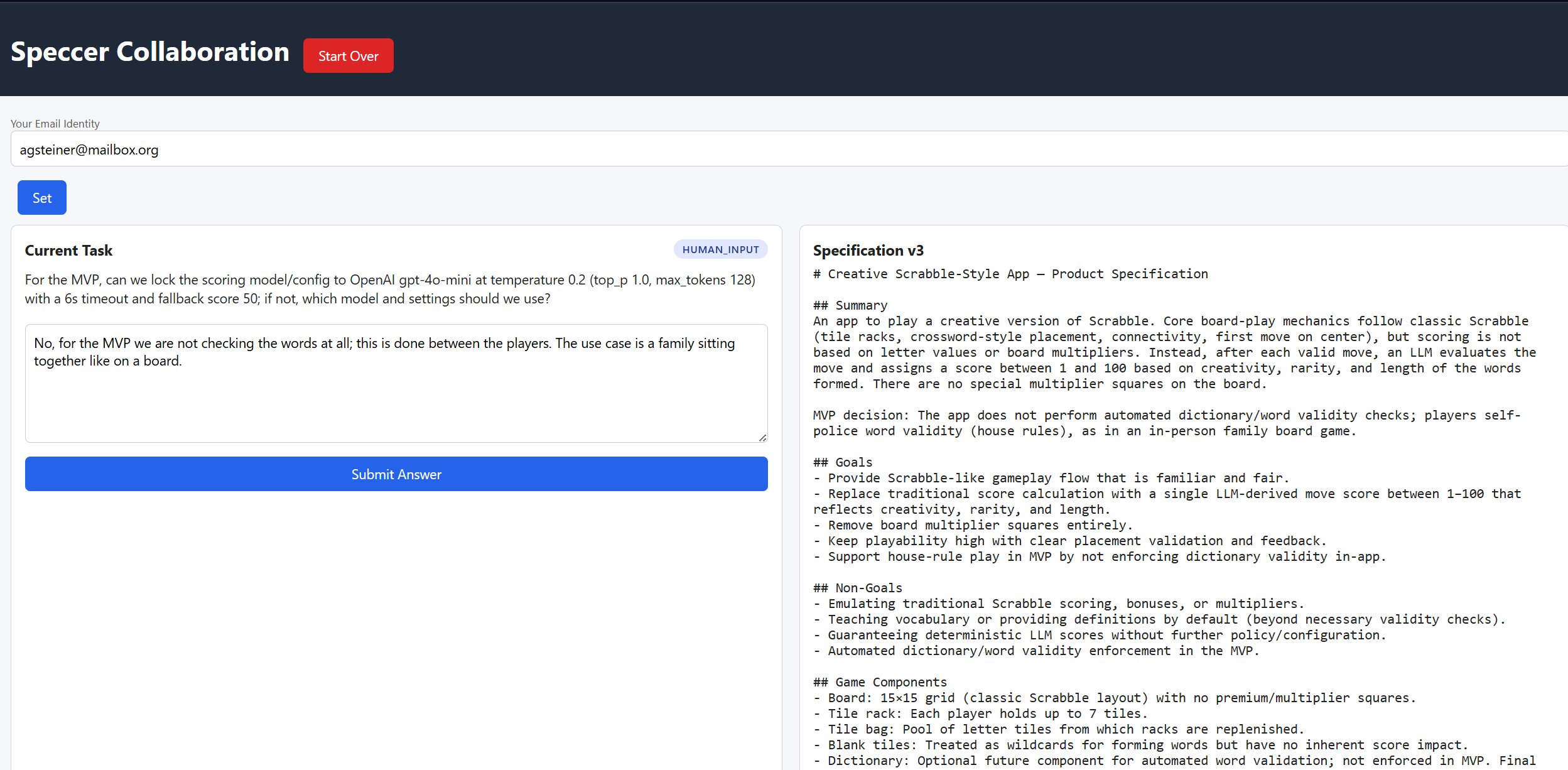

Part 1: "Speccer" - Collaborative specification of apps

An app that, surprisingly, actually works: it collaboratively generates specifications that are then used as a prompt to create an app. The idea is: multiple authors register with the app. After an initial description of the app you want to specify, there’s a long cycle:

1. The LLM generates the first spec, which every author can see.

2. The LLM generates a set of questions that still need to be answered before the specification is far enough along that an LLM can build the app.

3. All authors answer the questions.

4. The LLM builds a refined next version of the spec based on the answers.

Then back to step 2.
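The cycle above can be sketched in a few lines of Python. This is only an illustration of the loop, not Speccer's actual code (Speccer runs on Node.js): the function names, the prompt wording, the `DONE` stop signal, and the `max_rounds` limit are all assumptions made up for this sketch. The `llm` parameter stands for any function that takes a prompt and returns the model's text.

```python
from typing import Callable

# Hypothetical types for this sketch: an LLM is any prompt -> text function,
# and answers map each author's name to one reply per question.
LLM = Callable[[str], str]
Answers = dict[str, list[str]]


def refine_spec(
    description: str,
    llm: LLM,
    collect_answers: Callable[[list[str]], Answers],
    max_rounds: int = 3,
) -> str:
    """Run the generate / ask / answer / refine cycle and return the final spec."""
    # Step 1: first version of the spec from the initial description.
    spec = llm(f"Write a first app specification for: {description}")

    for _ in range(max_rounds):
        # Step 2: ask the LLM which questions are still open.
        raw = llm(
            "List the open questions for this specification, one per line. "
            f"Reply DONE if nothing is missing.\n\n{spec}"
        )
        questions = [line.strip() for line in raw.splitlines() if line.strip()]
        if not questions or questions == ["DONE"]:
            break

        # Step 3: every author answers every question.
        answers = collect_answers(questions)

        # Step 4: fold the answers into a refined spec, then loop back to step 2.
        qa_lines = []
        for i, question in enumerate(questions):
            qa_lines.append(f"Q: {question}")
            for author, replies in answers.items():
                qa_lines.append(f"  {author}: {replies[i]}")
        spec = llm(
            "Refine the specification using these answers.\n\n"
            f"Specification:\n{spec}\n\nAnswers:\n" + "\n".join(qa_lines)
        )

    return spec
```

Passing the LLM in as a plain function keeps the loop independent of any particular provider and makes it easy to try out with a stub before spending money on real API calls.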

I only developed what I needed myself, i.e., Speccer can only work on one specification at a time, there’s no real user management, no passwords, no undo.

But: it works great.

Try it yourself: https://github.com/kagsteiner/Speccer/tree/main

You need a Node.js installation and a little bit of developer knowledge. And, above all, an OpenAI API key.

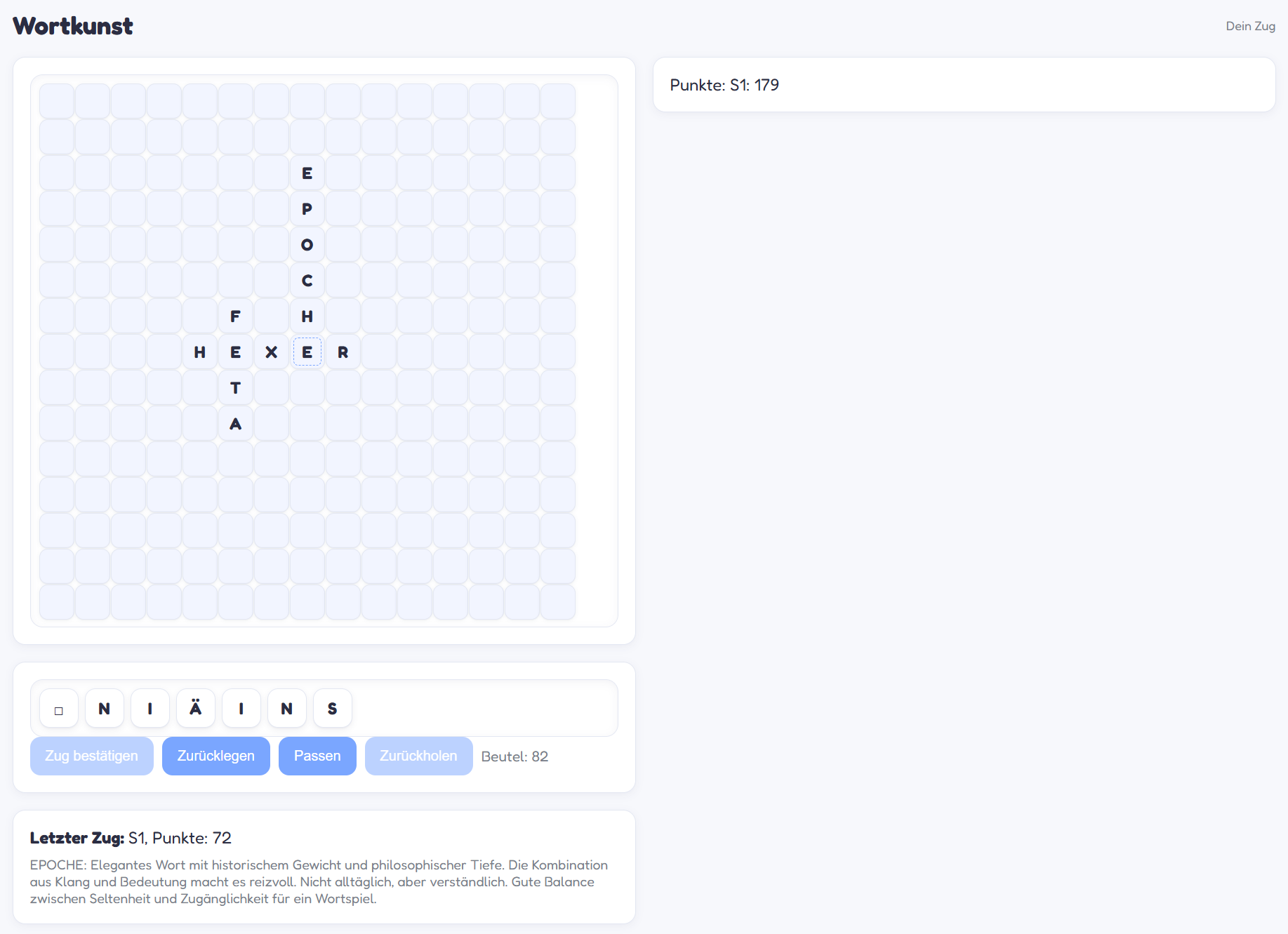

Part 2: "Wortkunst" - a creative Scrabble variant

The other day while playing Scrabble, I once again got annoyed by how the game's design, with its double/triple score squares and letter points, favors an unpleasantly tactical style of play. What you really want is to lay beautiful words. Long words. But that often gives your opponent the chance to cash in big time.

So, I fired up Speccer and specified a variant where you leave scoring to an “AI oracle” that rates the found words with a score from 1–100 based on creativity, rarity, and beauty.
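To make the oracle idea concrete, here is a minimal sketch of how such a rating call could be prepared and checked. It is not taken from Wortkunst: the prompt wording, the `{"score": ...}` reply format, and both function names are assumptions. The only parts that come from the description above are the three criteria and the 1–100 range.

```python
import json

# The three criteria named in the post.
CRITERIA = ("creativity", "rarity", "beauty")


def build_oracle_prompt(word: str) -> str:
    """Build a rating prompt for one word (wording is an assumption)."""
    return (
        f"Rate the word '{word}' for {', '.join(CRITERIA)}. "
        'Answer with JSON only, for example {"score": 42}, '
        "where score is an integer from 1 to 100."
    )


def parse_score(reply: str) -> int:
    """Read the score from the oracle's JSON reply and clamp it to 1..100."""
    data = json.loads(reply)
    score = int(data["score"])
    # Never trust the model to stay in range: clamp instead of failing the move.
    return max(1, min(100, score))
```

Clamping rather than rejecting an out-of-range score is a design choice for a family game, where a slightly odd rating is less annoying than a move that fails.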

After three rounds of sometimes quite technical questions about details like the exact matchmaking, the spec was done. ChatGPT had to fix things twice: first the “Start Game” button didn’t work, then the Mistral LLM returned its JSON response wrapped in triple quotes. One more little design tweak and the app was done.
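A wrapped JSON reply like that is a common stumbling block. The sketch below shows one defensive way to handle it, and it is not the fix ChatGPT actually applied in Wortkunst. Since it isn't clear whether "triple quotes" meant literal quote characters or a Markdown code fence, this hypothetical `extract_json` helper strips either kind of wrapper before parsing.

```python
import json

# Wrappers a model might put around its JSON: a Markdown fence (three
# backticks) or three double/single quotes. Built with "*" so this
# example contains no literal fence of its own.
WRAPPERS = ("`" * 3, '"' * 3, "'" * 3)


def extract_json(reply: str) -> dict:
    """Strip an optional triple-quote or fence wrapper, then parse the JSON."""
    text = reply.strip()
    for wrapper in WRAPPERS:
        wrapped = text.startswith(wrapper) and text.endswith(wrapper)
        if wrapped and len(text) >= 2 * len(wrapper):
            text = text[len(wrapper):-len(wrapper)].strip()
            # A Markdown fence may carry a language tag such as "json".
            if text.lower().startswith("json"):
                text = text[4:].strip()
            break
    return json.loads(text)
```

Replies that are already plain JSON pass through unchanged, so the helper can sit in front of every LLM call without special cases.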

Sure, it could be made prettier but hey, from “idea in my head” to this result in a total of 2 hours. Not bad.

Then it took another 30 minutes to get the game running on my local Synology (which is not on the Internet). Now I can try it with the family. (A free-to-play game that costs me money per LLM call isn’t something you casually put on the web...)

Mind-twisting Part 2

It’s pretty amazing how rapidly software development with LLMs is evolving. Still, I would absolutely not recommend an approach like the one above for professional applications that I sell and have to support even when my LLM crashes, which can still happen. And when you’re faced with 100k lines of code, all vibe-coded by an LLM, and you have no idea how it works in detail, well, I think that’s the ultimate developer hell.

But for your own fun apps: great.