So it really doesn't know Backgammon very well

Yay, my Backgammon quiz is running. And it was a huge slog until it did—a good example of why you have to be careful with statements like “Anyone can develop software with the help of AIs.” Because in the end, after a few hours without progress, I was stepping through the code in the debugger with a helpless AI to find the bug “by hand.”

But from the beginning...

The Backgammon Quiz

I’m an avid Backgammon player. It started sometime in the 90s when I (seriously) hit my Toshiba notebook with my fist so hard that I had to buy a new one. A Backgammon program called Jellyfish annihilated me again and again in a simple game of luck called Backgammon—it was a tear-your-hair-out situation (or a “destroy the computer” situation). Maliciously, the full version of the program cost 200 DM. You get annihilated for free, but finding out what the right move is costs you a chunk of money.

Since then I’ve known that while Backgammon is a game of luck, finding the best move is an art, and even the simplest positions require complex probability calculations. Yes, I’ve read about 10 Backgammon books, but I still can’t get past the threshold where my per-move errors consistently cost less than 1/50 of a game (roughly speaking).

So I had the idea for a Backgammon quiz. The app analyzes all my completed matches on the Backgammon server called dailygammon, remembers all my mistakes, and in the positions where I moved incorrectly, it presents my move, the best move, and a few other moves in a multiple-choice quiz. I tap, and it tells me whether I’m right.

Sounds simple.

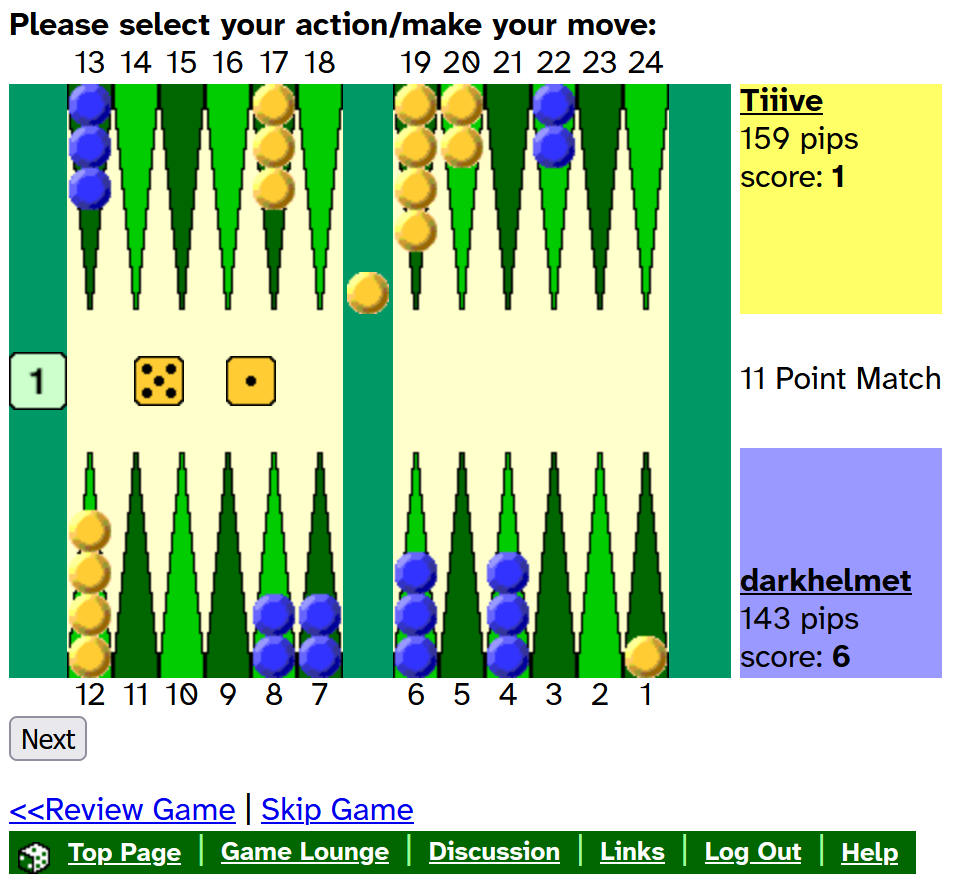

Dailygammon. Old but great.


It isn’t, Part 1 – GNU Backgammon as Final Boss 1

But it isn’t. The app must:

1. Log in to Dailygammon as a user

2. Parse HTML pages there and extract matches from a human-readable Backgammon notation

3. For every position of every match call the open-source software “GNU Backgammon” so it can run a Python script to analyze the position and save the result to a JSON file

4. If my move was a “blunder,” choose up to four quiz moves and save the position together with the moves as a quiz-question candidate

5. Draw a nice Backgammon board in HTML/JS, and have endpoints underneath it in node.js to collect moves and run the quiz

6. Implement the quiz logic
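To make step 4 a bit more concrete, here is a minimal Python sketch of how the quiz moves for one blunder could be picked. It is not the app's actual code: the function name `pick_quiz_moves`, the idea that moves are plain strings, and the assumption that the engine returns its candidates sorted best-first are all mine, for illustration only.

```python
import random


def pick_quiz_moves(ranked_moves, played_move, rng=None):
    """Return up to four distinct moves for one quiz question.

    ranked_moves: engine candidates, best first (assumed non-empty).
    played_move:  the move actually played in the match.
    """
    rng = rng or random.Random()
    choices = []
    # Priority: best move, played move, second-best move.
    for move in [ranked_moves[0], played_move, *ranked_moves[1:2]]:
        if move not in choices:
            choices.append(move)
    # Fill whatever is left with random other candidates.
    rest = [m for m in ranked_moves if m not in choices]
    rng.shuffle(rest)
    choices.extend(rest[: 4 - len(choices)])
    rng.shuffle(choices)  # the correct answer should not always come first
    return choices
```

If the engine only knows two legal moves, the question simply ends up with two answers, which matches the "up to four" in step 4.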

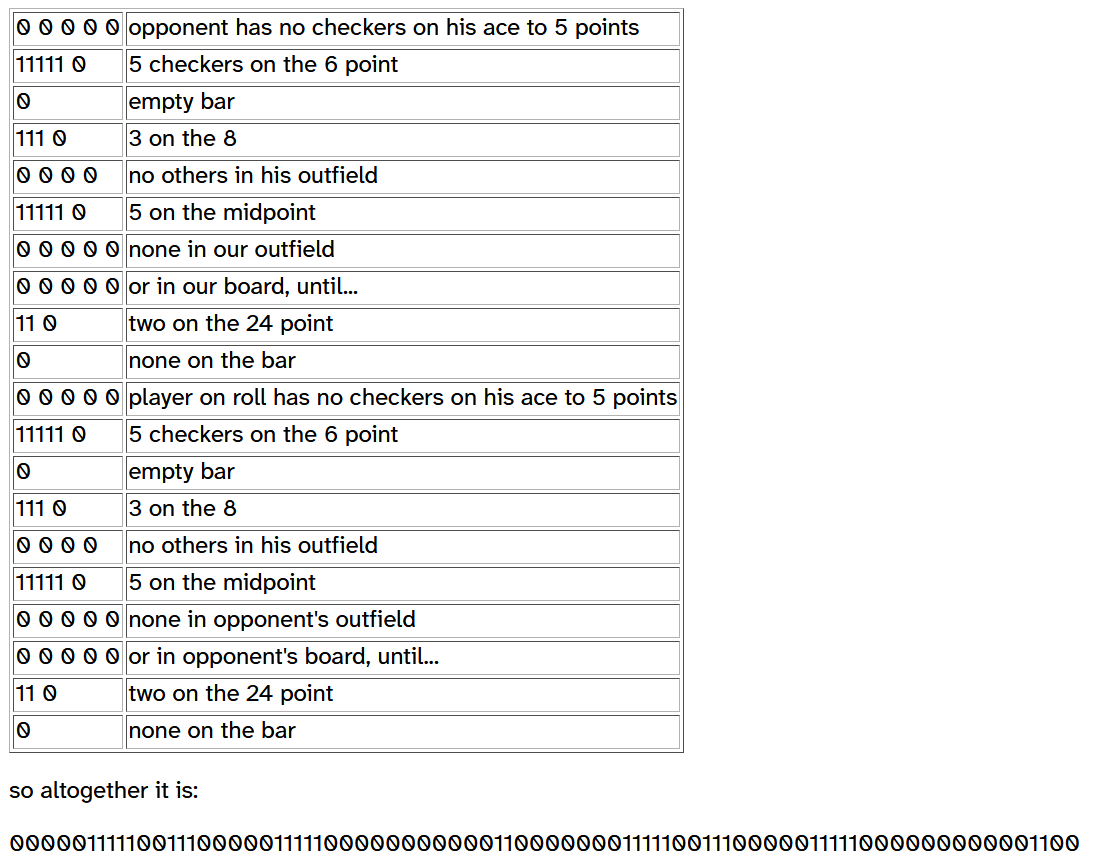

A key idea was to store the respective position in the match using the so-called “GNU ID.” That was probably my mistake #1. The GNU ID is very AI-unfriendly. It’s quite cleanly specified by GNU: https://www.gnu.org/software/gnubg/manual/gnubg.html#gnubg-tech_matchid

Excerpt from the description of the GNU ID


But what would have been a tedious but simple task for me (first encoding the position and match information in binary, then in Base64) overwhelmed Cursor + GPT-5. For hours I found faulty IDs, analyzed them, suggested what might be wrong, and reviewed the fix.
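For readers who want to see what "binary, then Base64" means in practice, here is a minimal Python sketch of the position half of the ID, written from my reading of the GNU Backgammon manual. The function name `position_id` and the list layout (24 points counted from each player's own ace point, then the bar) are my own choices. Which player goes first in the bit string is something to check against the manual; the symmetric starting position cannot reveal a mix-up. The match half of the ID is not covered here.

```python
import base64


def position_id(first, second):
    """Encode two 25-entry checker-count lists (points 1..24, then bar)."""
    bits = []
    for side in (first, second):
        if len(side) != 25 or sum(side) > 15:
            raise ValueError("need 25 entries and at most 15 checkers per side")
        for count in side:
            bits.extend([1] * count)  # one 1 per checker on this point
            bits.append(0)            # a 0 closes the point
    bits.extend([0] * (80 - len(bits)))  # pad to 80 bits
    key = bytearray(10)
    for i, bit in enumerate(bits):
        key[i // 8] |= bit << (i % 8)  # first bit is the lowest bit of byte 0
    # 10 bytes give 14 Base64 characters once the "==" padding is dropped.
    return base64.b64encode(bytes(key)).decode("ascii").rstrip("=")


# Starting position from one player's own perspective.
start = [0] * 25
start[5], start[7], start[12], start[23] = 5, 3, 5, 2  # 6-, 8-, 13-, 24-point

print(position_id(start, start))  # 4HPwATDgc/ABMA
```

Note that both lists are given from each player's own perspective, which is exactly the detail that caused trouble later on.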

My second, more serious mistake came into play here for the first time—namely my assumption that the LLM knows Backgammon well. But Backgammon is quite unintuitive for a machine. If you don’t know it: imagine sitting opposite your opponent with the Backgammon board between you. You do not move forward and back like in chess; no, your checkers travel from your perspective from the upper right to the upper left, then hop to the lower left, and finally reach the goal at the lower right. And when you point to a point, say point 18, that point is point 7 for your opponent (in general 25 minus your point). I now think that GPT-5 didn’t know all that, especially the different point numbers.
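The point-number rule fits in one line of Python. A minimal sketch; the function name `mirror_point` is made up for this post:

```python
def mirror_point(point):
    """Translate a point number (1..24) into the opponent's numbering."""
    if not 1 <= point <= 24:
        raise ValueError("expected a point between 1 and 24")
    return 25 - point


print(mirror_point(18))  # 7
```

Applying it twice gets you back to where you started, which makes for a cheap sanity check in tests.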

And so it happened that steps 1 and 2 were completed cleanly in minutes (paste HTML code, write down the request, done), but we sat on step 3 for days while the machine wrote test scripts to read, write, deconstruct GNU IDs, etc. Eventually that part worked, but things didn’t improve.

Part 2 – building a UI

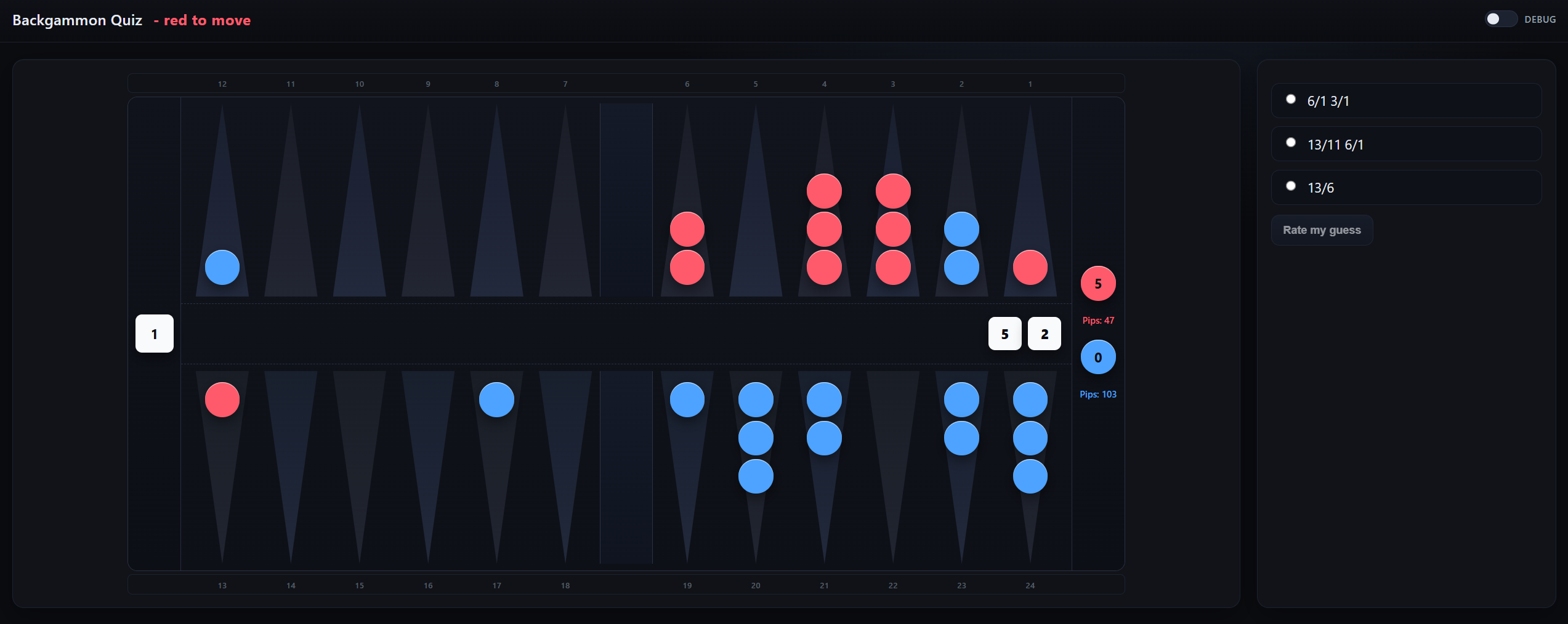

The UI along with the endpoints underneath it was built by the LLM quickly, as expected. There were a few misunderstandings. The “BAR” has to be vertical, not horizontal, and—this is where I first noticed it—the point numbers from the player’s perspective took several dialogues with the machine.

But it all went quite decently. And with some CSS magic that amazed me - I only know CSS basics.

The Quiz UI


Part 3 – the test. Again and again

And so I ended up getting my quiz faster than if I had hand-coded it.

The thing is: about 30% of the quiz positions were total nonsense. Illegal positions; moves that are impossible in the positions.

The “vibe-based approach” of showing the machine failure situations and having it analyze them made no progress. Hours passed without success.

In the end (insert a smug human superiority grin here) the human had to step into the debugger after all.

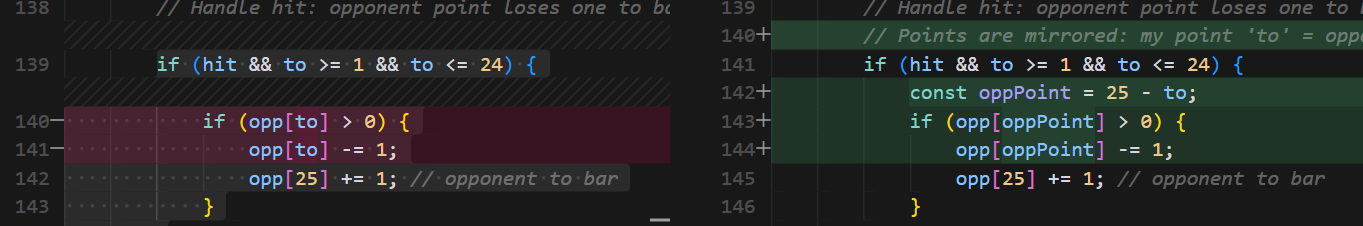

It turned out that the app implemented hitting a blot incorrectly because it didn’t grasp that its point 7 is my point 18. The app represented the board twice—once as 24 points occupied by my checkers or empty, and once the same for the opponent. So if I move, for example, from 23 to 18 and hit a blot there, I must decrease the checker count on 23 by one, increase it on 18, and decrease his checker count NOT on 18, but on 7 (and increase on 25, the special BAR point).
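Here is a minimal Python sketch of the corrected logic, assuming the two-list board described above. The names `new_board` and `move_checker` and the unused index 0 are my own simplifications, not the app's real code.

```python
BAR = 25  # index of the special bar point, as in the text


def new_board():
    # Index 0 is unused here; 1..24 are points, 25 is the bar.
    return [0] * 26


def move_checker(mine, theirs, src, dst):
    """Move one of my checkers from src to dst (dst in MY numbering, 1..24)."""
    if mine[src] < 1:
        raise ValueError(f"no checker of mine on point {src}")
    their_point = 25 - dst  # same physical point in the opponent's numbering
    if theirs[their_point] >= 2:
        raise ValueError(f"point {dst} is blocked")
    mine[src] -= 1
    mine[dst] += 1
    if theirs[their_point] == 1:  # a blot: hit it and send it to the bar
        theirs[their_point] -= 1
        theirs[BAR] += 1


mine, theirs = new_board(), new_board()
mine[23] = 2
theirs[7] = 1   # the blot: his point 7 is my point 18
theirs[18] = 3  # his point 18 is my point 7 and must stay untouched
move_checker(mine, theirs, 23, 18)
print(theirs[7], theirs[BAR], theirs[18])  # 0 1 3
```

The buggy version looked at `theirs[dst]` instead of `theirs[25 - dst]`, so in this example it would have looked at his three checkers on his point 18 instead of the blot.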

In the end, a completely trivial bug. But one that would never have been fixed without human development skills (by the way, I also approached the problem with Claude 4.5 Sonnet and the somewhat suspect-to-me GPT-5 Codex; they were similarly confused).

Introducing: The bug


What did I learn?

Probably my biggest mistake was that I neither probed in advance how much Backgammon the LLM actually knew nor specified the game precisely. Better to over-specify than to under-specify: that’s my main takeaway from the drama.

I was probably still significantly faster overall than if I had done it manually, but also significantly more annoyed...

The (insufficient) prompt as reference

Here is the beginning of the prompt. Note: I do not explain Backgammon. At all. Not a word. Dumb.

Let's create an app for users of the site "dailygammon.com". This site only uses HTTP to play off-line backgammon matches.

When a match is completed, the player can export it as a text file for later analysis.

Our app shall have the following purpose: A player of the web site "http://dailygammon.com" can use the App's UI to select a time range from today to n days in the past. Our app will then contact dailygammon.com to retrieve text files with all finished matches in that timeframe.

The app will also have a way to select a number n of positions.

Then the app will contact a backgammon engine, and analyze all these matches. It will gather the top n mistakes that the player was making in these matches. For each of the positions where the player made a big mistake, it will show a quiz where the player can select one of four moves - the best move, the player's actual move, the second best move and a random move. The player then can select the move and get the answer if he was right. The results shall be stored in a simple database so that the player can keep a library of positions he failed in for continous training.