THE SINGULARITY IS NEAR!

AIs are getting better and better, faster and faster

On my Mastodon profile on hachyderm.io I name 2029 as the year in which I expect the singularity. The singularity is the moment when AI becomes more intelligent than we humans, and then shortly afterwards continues to develop in rapid exponential growth to a level that is unimaginable for us.

The idea behind this is that as soon as AI is better at programming AIs than we are, it will no longer be a few thousand clever human software developers who are available, but millions of cleverer machine developers, who will be a bit more clever with each generation of AI developed this way.

If you look at the time between Opus 4.5 and Opus 4.6 and that between GPT 5.2 and GPT 5.3, together with the clearly increased ability to develop software, for example, you might think that it won’t take another 3 years, but maybe... one...?

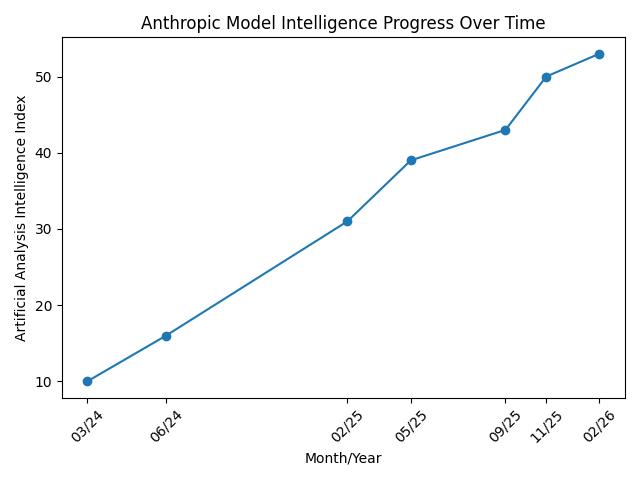


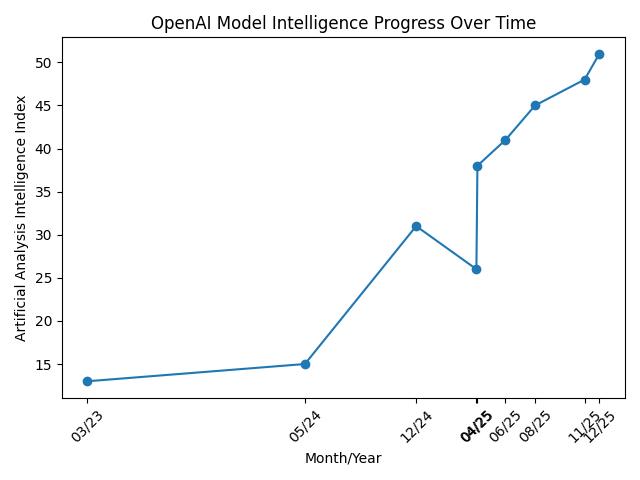

If you look at how the "Artificial Analysis Intelligence Index" scores of Anthropic's and OpenAI's models have developed over time, you also see continuous growth, although it is linear rather than exponential.

Anthropic's model performance over time

OpenAI model performance over time

The odd dip in the OpenAI curve is GPT-4.1, which was released in April 2025 and scored only 26 points.

And even when you read the manufacturers’ obviously exaggerated comments about the involvement of the AIs:

OpenAI:

"GPT 5.3 Codex is our first model that was crucially involved in its own development. The Codex team used early versions to debug its own training, manage its own deployment, and diagnose test results and evaluations – our team was overwhelmed by how much Codex was able to accelerate its own development."

Anthropic:

"We build Claude with Claude. Our engineers write code with Claude Code every day, and every new model first gets tested on our own work. With Opus 4.6, we’ve found that the model brings more focus to the most challenging parts of a task without being told to, moves quickly through the more straightforward parts, handles ambiguous problems with better judgment, and stays productive over longer sessions."

then you can, even with the proverbial grain of salt, come to the conclusion: the AIs are supporting the AI developers in developing the next AIs in a way that is already accelerating this process. The path to the singularity has therefore already begun.

Other observations

In this article, another software developer writes about his very similar observations. His conclusion is, in my words, that the singularity is near, that it will massively change our lives, and that we should reconsider a few things: how do we want to learn, what new possibilities do we have, and so on.

Marc Andreessen, long known as a technology optimist, also recently talked in a surprisingly interesting podcast (I don’t like Marc very much) about how the approaching wave of highly intelligent AIs will greatly accelerate those who actively engage, who build broad knowledge, while those who just carry on as before will have difficulties in the post-AI world: listen to the episode on Spotify

I have deliberately looked for skeptical views on AI. Even those often show, in unexpected ways, how rapid the progress currently is. For example, Forbes wrote at the end of December 2025 that the AGI hype was over because the latest models like GPT-5 had fallen short of expectations and everyone was now backpedaling. The article was already outdated at the time of publication, its conclusions obsolete. Two months have passed since then, and compared to GPT 5.3 Codex and Opus 4.6, GPT-5, the model the article names as the latest, seems like a model from the Stone Age.

Apart from that, it also turned out to be difficult to find skeptics whose articles are technically reasonably well-founded and up to date with such a rapidly changing field.

And then there are the AI-hypers.

Like the psychopathic richest man in the world, who, always good for a pun, has now founded a "Macrohard" department in his AI labs to use AI to "disrupt" the software business, as the buzzword goes. Like the stock analysts who sent the shares of many "Software as a Service" providers through the floor in January, partly on the assumption that soon you would just type "please write me a word-processing program that is better than Word", wait, and be done.

We are still a good way away from that.

My own experiments end with ambiguous findings. On the one hand: I asked ChatGPT 5.2 last week to convert a three-page markdown document into HTML. After a very good start, it simply stopped at 2/3 of the document. I was reminded of the ChatGPT 3.5 days.

On the other hand: I also asked OpenAI Codex + GPT 5.3 codex to program a personal news app for me that scans all possible known and relevant RSS feeds for me, has them translated and summarized by a local LLM, prioritized according to my importance criteria, and then presents me with news tailored to me from about 50 sources. An hour passed, then the app was running perfectly. In the past, that would have been a job for 2 weekends.
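To give an idea of what the core of such an app does, here is a minimal sketch of the "scan feeds and prioritize" part. It is not the code Codex generated for me: the keyword weights, function names and the sample feed are invented for illustration, and the translation and summarization step with a local LLM is left out entirely.

```python
import xml.etree.ElementTree as ET

# Hypothetical importance criteria: keyword -> weight (negative = uninteresting)
KEYWORD_WEIGHTS = {"ai": 3, "llm": 3, "security": 2, "football": -2}


def parse_rss_items(rss_xml):
    """Extract title and description of every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": item.findtext("title", default=""),
            "description": item.findtext("description", default=""),
        })
    return items


def score_item(item, weights=KEYWORD_WEIGHTS):
    """Sum the weights of all known keywords in title and description."""
    words = (item["title"] + " " + item["description"]).lower().split()
    return sum(weights.get(word.strip(".,:;!?"), 0) for word in words)


def prioritize(items, weights=KEYWORD_WEIGHTS):
    """Return the items sorted from most to least important."""
    return sorted(items, key=lambda it: score_item(it, weights), reverse=True)


# Invented sample feed; a real app would download the XML of each source
SAMPLE_FEED = """<rss version="2.0"><channel><title>Example</title>
<item><title>Football results</title><description>Weekend football recap.</description></item>
<item><title>New LLM released</title><description>An AI lab ships a model.</description></item>
<item><title>Security patch</title><description>Update your router.</description></item>
</channel></rss>"""

ranked = prioritize(parse_rss_items(SAMPLE_FEED))
for entry in ranked:
    print(score_item(entry), entry["title"])
```

Simple keyword weights are only a stand-in here; in a real app the prioritization could just as well be delegated to the LLM.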

What is certain?

In view of all the pros and cons on the topic of "are we close to singularity", you wonder what you can now assume with reasonable certainty.

On the one hand, it is certain that the capabilities and usefulness of LLMs have been increasing continuously for years, and that the pace of this increase is, if anything, going up as well.

On the other hand, it is certain that the current transformer architecture of LLMs can, in principle, hallucinate, and that letting agents act unverified outside a sandbox, that is, letting them change real things in our lives such as booking trips or buying things, is a very bad idea. As is normal with language models, the approach delivers astonishing, positive results in 95% of cases. Those successes are not worth the disasters in the remaining 5%: my own files on my computer’s hard drive are just as dear to me as my money.
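The 95% versus 5% argument is simple expected-value arithmetic. In the following sketch, the gain and loss figures are arbitrary illustrative units that I made up, not measurements; only the 95% success rate comes from the text above.

```python
def expected_value(success_rate, gain_per_success, loss_per_failure):
    """Average outcome per agent action, in the same unit as gain and loss."""
    return success_rate * gain_per_success - (1 - success_rate) * loss_per_failure


def break_even_loss(success_rate, gain_per_success):
    """Loss per failure at which the expected value drops to zero."""
    return success_rate * gain_per_success / (1 - success_rate)


# Illustrative assumption: a success saves 1 unit of effort,
# a disaster (deleted files, a wrong purchase) costs 100 units.
print(expected_value(0.95, 1, 100))
print(break_even_loss(0.95, 1))
```

At a 95% success rate, the break-even point is a disaster that costs about 19 times as much as a success gains. Anything worse than that makes unverified actions a losing bet on average, and lost files or lost money easily fall into that category.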

So where are we really?

That cannot be said for sure. But I believe that after several years of continuous rapid growth of the LLMs, and with no concrete hard obstacle known to me in sight, it is reasonable to switch the "default" assumption: to expect that the progress continues unless something concrete stops it.

I like the comparison to a backgammon game: Backgammon is a dice game with a lot of luck and almost as much strategy. At any given time, you can say (especially if you use an AI that plays the game perfectly) who is ahead in a game, how likely it is that I will win this game. But there are moments of huge luck or bad luck: in a hopeless position you roll double sixes twice and win.
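How rare such a stroke of luck is can be calculated exactly. A short sketch using exact fractions (the names are my own):

```python
from fractions import Fraction

# Both dice show a six: 1/6 * 1/6
P_DOUBLE_SIX = Fraction(1, 6) * Fraction(1, 6)


def prob_repeated(p_single, times):
    """Probability that an independent event happens `times` times in a row."""
    return p_single ** times


p_two_in_a_row = prob_repeated(P_DOUBLE_SIX, 2)
print(p_two_in_a_row)         # 1/1296
print(float(p_two_in_a_row))
```

So two double sixes in a row happen roughly once in 1300 attempts: rare enough that you should not plan on it, but frequent enough that every regular player has seen it.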

It is similar with AIs. It could soon turn out that LLMs hit a hard limit to further growth. It used to be thought that this point would be reached once the collected knowledge of the internet had been used up as training material. That assumption turned out to be wrong. For example, domains like software development were found where you can check whether the LLM result is correct, because the program either works or doesn’t. These results help to further train the LLM. Likewise, one of the big manufacturers (I would bet on Google, because they are far more broadly positioned in AI than Anthropic and OpenAI) could come up with a brilliant new idea that immediately raises the next AI to a human-like level of intelligence. We don’t know. In backgammon these are called "jokers" and "anti-jokers": dice rolls that immediately change the expected outcome.
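The "program either works or doesn’t" check can be sketched in a few lines. This is only an illustration of the principle under my own assumptions, not a description of how any lab actually trains its models; `verify_candidate` and the sample snippets are hypothetical.

```python
def verify_candidate(source, func_name, test_cases):
    """Return 1.0 if the generated code passes every test case, else 0.0."""
    namespace = {}
    try:
        # NOTE: generated code is untrusted; run it only inside a sandbox
        exec(source, namespace)
        func = namespace[func_name]
        for args, expected in test_cases:
            if func(*args) != expected:
                return 0.0
    except Exception:
        # Syntax errors, missing functions and crashes all count as failure
        return 0.0
    return 1.0


# Test cases: (arguments, expected result)
TESTS = [((2, 3), 5), ((-1, 1), 0)]

# Two pretend model outputs for the task "write add(a, b)"
GOOD = "def add(a, b):\n    return a + b\n"
BAD = "def add(a, b):\n    return a - b\n"

print(verify_candidate(GOOD, "add", TESTS))  # passes all tests
print(verify_candidate(BAD, "add", TESTS))   # fails the first test
```

The important property is that the score needs no human judgment: the tests decide, so such checks can be produced in large numbers and do not depend on how much text the internet still has to offer.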

But if I had to estimate the expected outcome today, assuming the game continues calmly, I would probably have to move my forecast of the singularity forward a bit, from 2029 to probably 2028. And then I would be more worried that this estimate is too conservative than that it is too optimistic.

What are the consequences?

Articles like "Something big is happening" should be read thoroughly and reflected upon. In particular, you should not rely on often outdated internet opinions ("haha, can’t even count the number of 'e's in 'Erdbeere' haha" or "it’s all just slop without any use"). I use LLMs every day, several times. I always keep in mind that, just as when I ask humans, there is a small probability that the results are wrong. Accordingly, I check the results more or less thoroughly depending on how important they are to me. But most of the time they are correct, and AIs dramatically accelerate many of my tasks and open up completely new possibilities. After about 8 hours of working with ChatGPT I know Unreal Engine quite well, and I was able to develop a small game with 3D animations and C++ code myself. In the past, the mere idea of engaging with something like that would have been absurd for someone with a job and a family. Another example: I have written at least 10 small apps that I use every day. That is, I specified them and the AI coded them. Without AIs, that would have been far too much effort.

My tip (similar to that of the author of "Something big is happening"): give the LLMs real tasks. Not just "What is the capital of Ghana". Not just counting letters. Something that costs you time in (office) life. Maybe you want to do something with Excel and don’t know how. Maybe you want to manage your photo library. Some things will go wrong (why the hell can’t even Opus 4.6 pass the "find all traffic lights" captcha?), others will blow you away. And not only does this prepare you for a reasonably likely future with superintelligent AIs, it’s also fun.